How Does Medallion Architecture Ensure Data Quality in a Lakehouse?

Data is one of the most valuable resources a business has for generating insights and making better decisions. However, the sheer volume of incoming data makes processing and analysis challenging. This is one reason why enterprises are looking beyond traditional data architectures to meet their data processing and analytical needs.

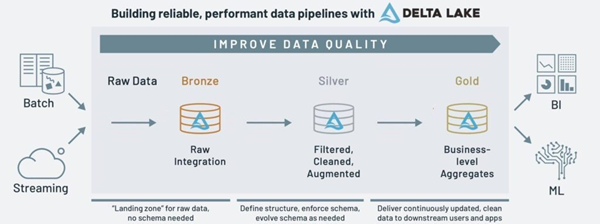

Databricks addresses this issue with the Medallion Architecture, used together with Delta Lake in the lakehouse. Delta Lake provides ACID (Atomicity, Consistency, Isolation, and Durability) transactions, while the Medallion Architecture passes data through several levels of transformation and validation before storing it in a format designed for analysis.

What challenges does Medallion Architecture solve?

Despite the potential that cloud computing offers businesses, several challenges with data lakes can still become roadblocks to organizational growth. The following list covers the crucial ones that must be addressed to get the most out of the cloud.

● Lack of transaction support.

● Hard to enforce data quality.

● Difficulty mixing appends, updates, and deletes in the data lake.

● Weak data governance, which turns data lakes into data swamps.

● A complex data model that is difficult to understand and implement.

However, with the Medallion approach, these challenges can not only be overcome but also be turned into opportunities for organizational evolution.

What is Medallion Architecture? How does it work?

A medallion architecture, also referred to as a “multi-hop” architecture, is a data design pattern used to logically organize the data in a lakehouse, with the goal of incrementally and progressively enriching the data as it flows through each layer of the architecture (Bronze ⇒ Silver ⇒ Gold tables).

Image Source: Databricks

The Bronze layer (Raw data)

This layer contains the unprocessed, raw data (any combination of streaming and batch transactions) ingested from various data sources and keeps it in its original state. The table structure in this layer mirrors that of the source system tables, plus additional metadata columns such as the load date/time, file name, and load ID.
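
As a rough illustration of the Bronze step, the sketch below uses plain Python lists of dictionaries as a stand-in for lakehouse tables. The function name `ingest_to_bronze`, the metadata column names (`_load_ts`, `_file_name`, `_load_id`), and the sample orders are all assumptions made for this example and are not part of any Databricks or Delta Lake API.

```python
from datetime import datetime, timezone


def ingest_to_bronze(source_rows, file_name, load_id):
    """Copy source rows unchanged and attach load metadata columns."""
    load_ts = datetime.now(timezone.utc).isoformat()
    return [
        # Source values are kept exactly as received; only metadata is added.
        {**row, "_load_ts": load_ts, "_file_name": file_name, "_load_id": load_id}
        for row in source_rows
    ]


# Hypothetical raw records, including untrimmed and invalid values.
source_rows = [
    {"order_id": "1001", "amount": " 25.50 ", "country": "us"},
    {"order_id": "1002", "amount": "n/a", "country": "DE"},
]

bronze_rows = ingest_to_bronze(source_rows, "orders_2024_01_01.csv", load_id=1)
```

Note that nothing is cleaned or rejected at this stage: the invalid `amount` value is stored as-is, so the raw input can always be reprocessed later.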

The Silver layer (Filtered/Cleansed/Validated data)

Data is read from the Bronze layer and written to the Silver layer after it has been filtered, cleaned, trimmed, and otherwise validated. In this layer, we apply business rules to transform the data into the desired format. This layer gives the data an enterprise-wide view, enabling use cases such as ad-hoc reporting, advanced analytics, and machine learning.
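
The Silver step can be sketched in the same simplified way, again with plain Python standing in for lakehouse tables. The function `to_silver`, the validation rules (parsable values, non-negative amounts, unique order IDs), and the sample rows are illustrative assumptions; real rules depend on the business.

```python
def to_silver(bronze_rows):
    """Trim, validate, and standardize bronze rows; return (valid, rejected)."""
    valid, rejected = [], []
    seen_ids = set()
    for row in bronze_rows:
        try:
            order_id = int(row["order_id"].strip())
            amount = float(row["amount"].strip())
        except ValueError:
            rejected.append(row)  # value cannot be parsed
            continue
        if amount < 0 or order_id in seen_ids:
            rejected.append(row)  # fails a rule or duplicates an earlier row
            continue
        seen_ids.add(order_id)
        valid.append({
            "order_id": order_id,
            "amount": amount,
            "country": row["country"].strip().upper(),
        })
    return valid, rejected


# Hypothetical bronze records: one unparsable amount and one duplicate.
bronze_rows = [
    {"order_id": "1001", "amount": " 25.50 ", "country": "us "},
    {"order_id": "1002", "amount": "n/a", "country": "DE"},
    {"order_id": "1001", "amount": "25.50", "country": "US"},
    {"order_id": "1003", "amount": "10", "country": "de"},
]

silver_rows, rejected_rows = to_silver(bronze_rows)
```

Returning the rejected rows separately, rather than dropping them silently, makes it possible to inspect why records failed validation.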

The Gold layer (Enriched data)

Data in this layer is taken from the Silver layer and then prepared and aggregated for business use. Data quality and business logic rules are applied to enrich the data into the format used for analytics. Data analysts and business analysts rely heavily on this layer to draw insights from the data.
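
A minimal sketch of a Gold-style aggregation follows, again using plain Python in place of lakehouse tables. The function `to_gold`, the per-country summary, and the sample rows are assumptions chosen for illustration only.

```python
from collections import defaultdict


def to_gold(silver_rows):
    """Aggregate cleaned orders into one summary row per country."""
    totals = defaultdict(lambda: {"order_count": 0, "total_amount": 0.0})
    for row in silver_rows:
        agg = totals[row["country"]]
        agg["order_count"] += 1
        agg["total_amount"] += row["amount"]
    # Sort by country so the output order is deterministic.
    return [{"country": country, **agg} for country, agg in sorted(totals.items())]


# Hypothetical silver records that have already been cleaned and validated.
silver_rows = [
    {"order_id": 1001, "amount": 25.5, "country": "US"},
    {"order_id": 1003, "amount": 10.0, "country": "DE"},
    {"order_id": 1004, "amount": 4.5, "country": "US"},
]

gold_rows = to_gold(silver_rows)
```

The result is a small, report-ready table that a BI tool or analyst could query without repeating the cleaning logic.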

Benefits of a Lakehouse Architecture

Organizations generate data from various sources, such as IoT devices, on-premises source systems, and numerous databases, and aggregate it in a data lake for analytics and machine learning. To extract value from this data, they need a modern data architecture that lets them move data between data lakes and purpose-built data stores efficiently. A lakehouse architecture provides:

● Transaction support: In an enterprise lakehouse, many data pipelines read and write data simultaneously. ACID properties guarantee consistency as multiple parties concurrently read or write data, typically using SQL.

● Schema enforcement and governance: It ensures data quality by rejecting data that does not conform to the schema defined for the table.

● BI support: BI tools can be used directly on the source data.

● Storage decoupled from compute: Storage and compute use separate clusters, so the system can scale to many more concurrent users and larger data sizes with less effort.

● Openness: It uses open, standardized storage formats, such as Parquet, and provides an API so that numerous tools and engines can efficiently access the data directly.

● Support for diverse data types, from unstructured to structured: It can store structured, semi-structured, and unstructured data, such as video and streaming data.

● Support for diverse workloads: It supports data science, machine learning, SQL, and analytics.

● End-to-end streaming: Streaming and real-time data presentation are the need of the hour for many industries. The lakehouse supports both batch and streaming workloads.
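
The transaction support and schema enforcement items in the list above can be illustrated with a simplified, all-or-nothing write check. This is only a conceptual sketch in plain Python, not how Delta Lake is implemented; `ORDERS_SCHEMA`, `SchemaMismatchError`, and `append_with_schema_check` are hypothetical names introduced for this example.

```python
# Hypothetical table schema: column name -> expected Python type.
ORDERS_SCHEMA = {"order_id": int, "amount": float, "country": str}


class SchemaMismatchError(Exception):
    """Raised when a batch does not match the table schema."""


def append_with_schema_check(table, batch, schema):
    """Append the whole batch, or nothing if any row violates the schema."""
    for row in batch:
        if set(row) != set(schema):
            raise SchemaMismatchError(f"unexpected columns: {sorted(row)}")
        for column, expected_type in schema.items():
            if not isinstance(row[column], expected_type):
                raise SchemaMismatchError(f"wrong type for column {column!r}")
    # Reached only if every row passed, so no partial batch is ever written.
    table.extend(batch)


orders_table = []
append_with_schema_check(
    orders_table,
    [{"order_id": 1, "amount": 9.99, "country": "US"}],
    ORDERS_SCHEMA,
)

bad_batch = [
    {"order_id": 2, "amount": 5.0, "country": "DE"},
    {"order_id": 3, "amount": "five", "country": "DE"},  # wrong type
]
try:
    append_with_schema_check(orders_table, bad_batch, ORDERS_SCHEMA)
    batch_rejected = False
except SchemaMismatchError:
    batch_rejected = True
```

Because the batch is validated in full before anything is appended, the one bad row causes the entire second batch to be rejected and the table keeps only the first, valid row.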

Want better Data Quality?

If so, we are happy to assist you. As data moves through the architectural layers of the lakehouse, its quality improves. iLink Digital offers robust data processing techniques, frameworks, strategies, methodologies, and a road map to swiftly deploy this lakehouse architecture. Check out our service portfolio to learn how you can start implementing this architecture effectively for your project.
